ICT4LT Module


The aims of this module are for the user to consider key issues in assessing language skills through ICT in order to be able to:

This Web page is designed to be read from the printed page. Use File / Print in your browser to produce a printed copy. After you have digested the contents of the printed copy, come back to the onscreen version to follow up the hyperlinks.

Terry Atkinson, Freelance Educational Consultant, UK/France.

Graham Davies, Editor-in-Chief, ICT4LT Website.

Computer Aided Assessment (CAA) covers a range of assessment procedures and is a rapidly developing area as new technologies are harnessed. In essence, CAA refers to any instance in which some aspect of computer technology is deployed as part of the assessment process. Some of the principal examples of CAA in language learning are:

CAA is more than just a list of possible applications, however. Its importance is intimately bound up with raising achievement, since it can be argued that the role of ICT in raising achievement cannot be fully measured unless ICT is also used in the assessment process.

Just as oral skills cannot easily be assessed by a written test, so there are ICT-specific language skills that cannot easily be assessed through pencil and paper exercises and tests. Consider the following examples:

If students spend time practising the above activities on computers, the intention is to raise achievement in language learning at a general level, and this might well be picked up in a conventional test or examination. However, as the balance shifts further, and most of students' reading is done online and most of their writing is computer-based, paper-based tests are unlikely to enable students to demonstrate their skills fully, particularly ICT-related ones such as using writing tools - spellchecker, thesaurus, etc. An integrated approach to teaching, learning and assessment is always likely to be more successful than a random approach. Thus, teaching methods, learning methods and assessment methods need to cohere if learners are to learn successfully and if valid and reliable results are to be achieved from assessment procedures. This is true whether or not ICT is used: extensive oral practice with no oral exam is still a problem in many classes, and so too is extensive use of computers with no opportunity to use computers as part of the assessment process. Moreover, teachers have less incentive to incorporate learning technologies into classwork if the examinations do not use these technologies or expressly prohibit their use.

Formative assessment: In general, CAA is used mainly for formative assessment rather than summative assessment because it is excellent for giving immediate feedback, e.g. in tests designed to measure students' progress in specific areas, either for self-assessment purposes or for the teacher, e.g. as in placement tests (see Section 1.4).

Summative assessment: There is a good deal of discussion at present regarding the use of CAA - also dubbed e-assessment - in examinations at the end of a course and in national examinations such as the GCSE examinations in England. It's a controversial topic and has been subjected to a good deal of media hype, with outrageous claims being made regarding its possible uses. To what extent do you think CAA can be used to carry out summative assessment? Jot down your immediate thoughts on this question and the concerns that you might have if your own students were to be assessed in this way. Then read Section 1.3, Which skills can be assessed? Go back to your list of concerns and see which ones have been answered fully, partly or not at all. Send a message to us, using our Feedback Form, if you still have concerns that have not been answered.

Use of CAA for summative assessment is increasing, especially in higher education. Trainee language teachers in England and Wales are now expected to pass computer-based skills tests in literacy, numeracy and ICT, so some of the readers of this module have already experienced summative CAA and will be familiar with the procedures that make it possible. These include:

The above measures can ensure that there is as much security as in paper-based exams, and the process is not too dissimilar to that used for conducting oral exams. Perhaps a more important question is whether computer-based tests can really assess language skills and, if so, which skills are best assessed through CAA and which through other formats. These questions are addressed in the next section. Summative CAA is not likely to be widely implemented in the near future, as there are still a number of concerns about its reliability, but ingenious solutions and new technologies are likely to make a much greater degree of summative CAA possible than is currently the case. Placement testing and adaptive testing are more likely to be introduced in some areas: see Section 2.3.

| Skill | Assessment by computer | Assessment of electronic output by human being |
|---|---|---|
| Listening | Computer can assess a limited range of different types of responses to test comprehension. | Listening tests can be presented on a computer, students' answers can be stored electronically and assessed by a teacher. Self-assessment and peer assessment are also possible. |
| Speaking | Very limited as yet. Automatic Speech Recognition (ASR) software is developing rapidly but it is still too unreliable to be used in accurate testing. | Students can record their own voices on a computer for assessment by a teacher. Self-assessment and peer assessment are also possible. |
| Reading | Computer can assess a limited range of different types of responses to test comprehension. | Reading tests can be presented on a computer, students' answers can be stored electronically and assessed by a teacher. Self-assessment and peer assessment are also possible. |
| Writing | Very limited as yet, but spellchecking, grammar checking and style checking are possible, and some progress is being made in the development of programs that can assess continuous text. | Students' answers can be stored electronically and assessed by a teacher. Self-assessment and peer assessment are also possible. |

At a basic level it is simple to assess listening comprehension in much the same way as it is possible to assess reading comprehension, e.g. with multiple-choice, drag-and-drop and fill-in-the-blank tests. If well designed, this form of assessment works effectively and instant feedback can be offered to the student, which has a beneficial effect on learning. The main ways of assessing listening skills can be summarised as follows:

Despite these limitations, assessment of listening comprehension by computer can be of great value to students, offering a form of comprehensible input (Krashen 1985). Moreover, computer-based listening comprehension can combine sound with text, still images, video, animation and on-screen interactivity, thereby creating a much richer environment than is otherwise possible: see Module 2.2, Introduction to multimedia CALL, and Module 3.2, CALL software design and implementation. A measure of student control, allowing ease of navigation, options to retry or move to a different section, to attempt different tasks or roles, etc., is vital to ensure active participation. Good equipment is also vital: headphones, fast network/Internet access and/or networked CD-ROMs.

Limited assessment of speaking skills is possible. Self-assessment and peer assessment can be managed if facilities are available (e.g. microphone and headphones) to allow students to record themselves and listen to the playback. A number of multimedia CD-ROMs have this feature: see Module 2.2. The Encounters series of CD-ROMs, for example, allows students to take part in a role-play by recording their own voices - and re-recording them until they are satisfied with the results - and then saving the whole role-play on disc, with their own voices slotted into the appropriate positions in the role-play, for assessment by a teacher. The Encounters series of CD-ROMs was produced by the TELL Consortium, University of Hull and is available from Camsoft.

To assess speaking skills solely by a computer, using Automatic Speech Recognition (ASR), is a very complex task and research in this area is developing rapidly. ASR can be motivating for students working independently, but computers are still not completely reliable as assessors. For further information on ASR see:

See the software for automated testing of spoken English produced by Versant.

At a basic level it is simple to assess reading comprehension in much the same way as it is possible to assess listening comprehension, e.g. with multiple-choice, drag-and-drop and fill-in-the-blank tests. If well designed, this form of assessment works effectively and instant feedback can be offered to the student, which has a beneficial effect on learning. The main ways of assessing reading skills can be summarised as follows:

More extended reading tasks are harder to set on computer. On-screen reading of longer texts is in any case inadvisable. Research indicates that people read around 25%-30% more slowly from a computer screen. Web guru Jakob Nielsen writes:

Reading from computer screens is about 25% slower than reading from paper. Even users who don't know this human factors research usually say that they feel unpleasant when reading online text. As a result, people don't want to read a lot of text from computer screens: you should write 50% less text and not just 25% less since it's not only a matter of reading speed but also a matter of feeling good. We also know that users don't like to scroll: one more reason to keep pages short. [...] Because it is so painful to read text on computer screens and because the online experience seems to foster some amount of impatience, users tend not to read streams of text fully. Instead, users scan text and pick out keywords, sentences, and paragraphs of interest while skipping over those parts of the text they care less about. (Source: Be succinct! Writing for the Web, Alertbox, 15 March 1997.)

More recent research by Nielsen, in which the iPad and Kindle were examined, showed that

The iPad measured at 6.2% lower reading speed than the printed book, whereas the Kindle measured at 10.7% slower than print. However, the difference between the two devices was not statistically significant because of the data's fairly high variability. Thus, the only fair conclusion is that we can't say for sure which device offers the fastest reading speed. In any case, the difference would be so small that it wouldn't be a reason to buy one over the other. But we can say that tablets still haven't beaten the printed book: the difference between Kindle and the book was significant at the p<.01 level, and the difference between iPad and the book was marginally significant at p=.06. (Source: iPad and Kindle reading speeds, Alertbox, 2 July 2010.)

See Nielsen's other articles on Writing for the Web.

Extended texts are more likely to be print-based unless they are in hypertext format, i.e. separate pages linked together as on the Web, or CD-ROM reference materials. In the case of hypertext, the computer may be a suitable medium for assessing information-gathering techniques. The skills needed to track down documents, follow links within and between them and find specific extracts are of increasing importance in academic life, in commercial settings and in leisure time. These skills are, to an extent, generic rather than specific to any given language although learners do need to know key terms involved in searching for information. Many teachers are aware of the need to ensure that learners are equipped with the appropriate language skills for Web browsing, e.g. foreign language terms for help, search, next page, OK etc.

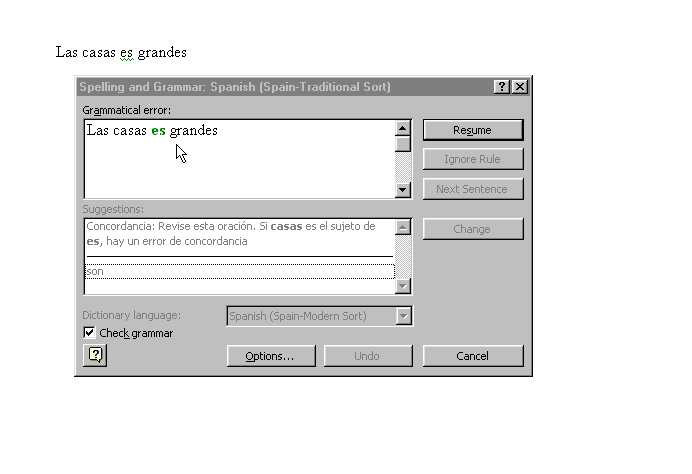

Limited assessment of writing skills is possible. It is fairly straightforward to program computers to assess the accuracy of single words and short sentences typed at the keyboard, and work on parsing students' typed responses, diagnosing errors and providing appropriate feedback is in progress - see Section 8 in Module 3.5, headed Parser-based CALL, and see Heift & Schulze (2003). There are also features in modern computer software that can be used within the assessment process, such as spellcheckers to enable self-assessment of spelling, and also grammar and style checkers, which - although still imperfect - do pick up many errors that students can use to self-correct, such as errors of gender or number: see example below which is a screenshot from Microsoft Word. See Section 6.1, Module 1.3, headed Spellcheckers, grammar checkers and style checkers. As for assessing continuous pieces of text, some progress has been made in developing programs that can roughly grade short essays.

Figure 1: Screenshot, Microsoft Word Spellchecker
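Checking single words typed at the keyboard, as mentioned above, can be made more helpful than a simple right/wrong judgement. The following is a minimal Python sketch, assuming a teacher-supplied list of accepted answers; the function names and feedback messages are hypothetical and are not taken from any particular CALL package. It distinguishes an exact match, a match that differs only in capital letters and a match that differs only in accents.

```python
import unicodedata

def strip_accents(text):
    """Remove diacritics, e.g. 'été' -> 'ete', by decomposing and dropping combining marks."""
    decomposed = unicodedata.normalize("NFD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))

def check_word(typed, accepted):
    """Compare a typed answer with the accepted answers and return graded feedback."""
    # Tidy spacing and use the composed Unicode form so accented letters compare reliably
    typed = unicodedata.normalize("NFC", " ".join(typed.split()))
    accepted = [unicodedata.normalize("NFC", a) for a in accepted]
    if typed in accepted:
        return "Correct"
    if typed.lower() in {a.lower() for a in accepted}:
        return "Correct, but check your capital letters"
    if strip_accents(typed.lower()) in {strip_accents(a.lower()) for a in accepted}:
        return "Nearly: check your accents"
    return "Incorrect"

print(check_word("ete", ["été"]))   # Nearly: check your accents
print(check_word("Été", ["été"]))   # Correct, but check your capital letters
```

The order of the checks matters: the most exact match is tried first, so a learner is only told about accents when case and spacing have already been ruled out.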

The main use of computers in the assessment of writing is, currently, the use of on-screen marking as described in Section 3.

You can read more about grammar and style checkers in:

Computer-based exercises and tests often take the same kind of format. The essential difference between an exercise and a test is the purpose to which it is put. An exercise usually offers instant feedback to the learner and an opportunity to correct any errors that are made, whereas a test may offer little feedback to the learner apart from a raw score at the end of the test, or no feedback at all, e.g. where the results of the test might be stored for analysis by a teacher or examination body. Exercises are usually designed to offer the learner practice in specific areas and to motivate and encourage, whereas tests are usually designed to assess the learner's progress in specific areas, i.e. for self-assessment purposes, for the teacher or for an examination body. But sometimes these distinctions become blurred. The main kinds of tests include:

See Linguanet Worldwide website: This project has recently undergone expansion to incorporate an interface in a number of new languages and addresses in particular the needs of adult learners and independent learners. The site includes advice on ways of assessing and improving one's current ability in different languages (including links to websites that offer diagnostic tests and placement tests), communicating electronically with other language learners and finding appropriate resources. A substantial online catalogue of language learning resources is also being built up.

The Goethe-Institut offers an online placement test for learners of German.

The BBC Languages website offers basic placement tests. Choose the language first then try the placement test.

When reading this section, bear in mind the distinction that was made earlier between exercises and tests. See Section 1.4, headed Exercise or test?

The feedback loop refers to the process through which the learner is given an opportunity to produce an answer and to receive feedback on his/her input. Feedback is essential to learning, but the nature of the feedback is also important, and the computer has certain advantages and disadvantages in providing that feedback. See:

See also: Bangs (2003).

The two main advantages of computer-generated feedback are that it can be given (i) instantly and (ii) in a non-judgemental way. The major disadvantage is that the feedback may not be adequately discriminatory or differentiated. It is possible to provide detailed, comprehensive feedback, but this is very time-consuming. Consider a multiple-choice test in which four answers are given for each question. There are lots of software packages that allow teachers to author multiple-choice tests of this sort and to write specific feedback for each answer. However, constructing such a test takes a significant amount of time, both in thinking through the details of the feedback and in typing it all in: see Module 2.5, Introduction to CALL authoring programs. Despite this disadvantage, the two main advantages of computer-generated feedback bring excellent learning opportunities, and exercises that exploit them can be authored by anyone with basic computer literacy. Heather Rendall has shown how a very simple program in which jumbled sentences have to be re-ordered can be used to develop grammatical insight: see the report on her research project in Section 5, Module 1.4. See also Rendall (2001).
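A multiple-choice item with specific feedback written for each answer might be represented as follows. This is a minimal Python sketch under stated assumptions: the class, its fields and the two modes are hypothetical, chosen to mirror the distinction between exercises (instant feedback) and tests (result recorded for later analysis) made in Section 1.4. Authoring programs store such items in their own formats.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

@dataclass
class MultipleChoiceItem:
    prompt: str
    options: Dict[str, str]    # option letter -> option text
    correct: str               # letter of the correct option
    feedback: Dict[str, str]   # option letter -> feedback written by the author

    def respond(self, choice: str, mode: str = "exercise") -> Tuple[bool, str]:
        """Mark one answer. In 'exercise' mode the learner sees the feedback at once;
        in 'test' mode only the result is returned, e.g. for a teacher to analyse later."""
        is_correct = choice == self.correct
        if mode == "test":
            return is_correct, ""
        return is_correct, self.feedback.get(choice, "Please choose one of the options.")

# A hypothetical French item: every wrong answer gets its own explanation
item = MultipleChoiceItem(
    prompt="Choose the correct article: ___ maison",
    options={"a": "le", "b": "la", "c": "les", "d": "l'"},
    correct="b",
    feedback={
        "a": "Le is masculine, but maison is feminine.",
        "b": "Correct: maison is feminine and singular, so it takes la.",
        "c": "Les is plural, but maison here is singular.",
        "d": "L' is used before a vowel or a mute h; maison begins with a consonant.",
    },
)

print(item.respond("a"))               # (False, 'Le is masculine, but maison is feminine.')
print(item.respond("a", mode="test"))  # (False, '')
```

Even for this one item, four separate pieces of feedback had to be written, which illustrates why authoring detailed feedback is so time-consuming.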

The immediate assessment that the learner receives provides the key to learning. Consider the following example:

the talks boys teacher to the

The task is to enter the text in the correct order, i.e.

The teacher talks to the boys

Consider what the learner must know in order to get this right: Meaning? Word order? Verb forms? If the learner gets the answer wrong, he/she is presented with the correct answer immediately. Although this is not the most sophisticated form of feedback, Rendall's research points to the power of this type of feedback in developing an instinctive grasp of how the language works. Her subjects justify their thinking by saying "it sounds right", so the effect of the feedback is to develop a sense of what is linguistically correct. This takes lots of practice, but with sentence forms like the one in the above example, banks of exercises can be generated quickly. In fact, the technique works best at short sentence or phrase level, because this reduces ambiguity. Here are some grammar points that can be tested through this simple procedure:

Can you draft some examples of the above points for the language(s) that you teach? Can you think of other points that could be tested in this way?
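The jumbled-sentence procedure can be sketched in a few lines of Python. This is a hypothetical illustration, not Rendall's program: it assumes one target sentence per item, ignores capital letters and final punctuation when checking, and, as in the procedure described above, simply shows the correct answer when the learner's attempt is wrong.

```python
import random

def normalise(text):
    """Lower-case, drop final punctuation and collapse spaces for comparison."""
    return " ".join(text.strip().rstrip(".!?").lower().split())

def jumble(sentence, seed=None):
    """Return the words of `sentence` in a random order that differs from the original."""
    words = normalise(sentence).split()
    shuffled = words[:]
    rng = random.Random(seed)
    # Keep shuffling until the order changes (impossible if all words are identical)
    while shuffled == words and len(set(words)) > 1:
        rng.shuffle(shuffled)
    return " ".join(shuffled)

def check(answer, target):
    """Immediate feedback: confirm a correct answer or show the correct one."""
    if normalise(answer) == normalise(target):
        return "Correct!"
    return "Not quite. The correct answer is: " + target

target = "The teacher talks to the boys"
print(jumble(target, seed=1))
print(check("the boys talks to the teacher", target))
# Not quite. The correct answer is: The teacher talks to the boys
```

Because the shuffle is driven by a seed, a teacher could regenerate the same jumbled version of each sentence when building a bank of exercises; the choice of seed is arbitrary.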

The most common exercise types are listed below:

It should be borne in mind that since the advent of the multimedia computer, tests and exercises can contain a variety of different kinds of presentation of the stimulus, student input and feedback, e.g. a multiple-choice test could consist of:

The possibilities are enormous. See Section 5, Module 3.2, headed Template examples.

Collections of ready-made exercises exist for the more commonly taught languages. These can be found in software catalogues and are increasingly available online via websites: see the Websites list (below). Many exercises have been developed by teachers and are free for non-commercial use. As an alternative to buying ready-made exercises, it is possible to use authoring programs to develop your own exercises: see Module 2.5, Introduction to CALL authoring programs. The following authoring programs are all in common use.

Hot Potatoes: An authoring tool that was created by Martin Holmes and Stewart Arneil at the University of Victoria, Canada, and launched in 1998. It enables the speedy creation of Web-based exercises for language learners, including multiple choice, gap-filling, matching, jumbled sentences, crosswords and short text entry. This authoring tool has proved extremely popular with language teachers and it continues to be used extensively for the creation of interactive exercises and tests on the Web. Visit the Hot Potatoes website to find out more, download the software and see lots of examples: http://hotpot.uvic.ca. See Winke & MacGregor (2001) for a review of Hot Potatoes.

Quia: This is an online provider of exercises. There are many language learning exercises which are free to use. It is also possible to develop your own exercises. It is very easy to do this and to store the exercise on the Quia website, although subscription charges may apply: http://www.quia.com

Fun With Texts: This is one of the most widely used authoring packages for Modern Foreign Languages in UK secondary schools and there are many ready-made exercises available as well as full authoring functions within the program. Its use is primarily in total text reconstruction - so-called total Cloze - but it includes other exercise types too. Version 4.0 includes multimedia enhancements that allow you to integrate images and audio and video recordings into the exercises. See Section 8, Module 1.4, headed Text manipulation, and see http://www.camsoftpartners.co.uk/fwt.htm

Question Mark: The Question Mark company develops software for presenting interactive exercises and gathering/collating test information: http://www.questionmark.com

TaskMagic: An authoring tool, produced by mdlsoft, for the creation of a variety of exercise types, including text match, picture match, sound match, picture-sound match, grid match, mix and gap, and exercises based on dialogues.

Websites: See the Websites list (below).

The Council of Europe's Common European Framework of Reference for Languages (usually known as the CEFR or CEF) provides a common basis for the elaboration of language syllabuses, curriculum guidelines, examinations and textbooks across Europe, based on research conducted by the Council of Europe dating back to the 1970s. It describes in detail what language learners have to learn to do in order to use a language for communication and what knowledge and skills they have to develop in order to be able to act effectively. The description also covers the cultural context in which language is set. The CEFR defines six reference levels of proficiency, which allow learners’ progress to be measured at each stage of learning and on a life-long basis. The Council of Europe's publications specify the number of guided learning hours (GLH) for the six levels, e.g.

The Waystage publication (1990 edition): "The learning-load involved is estimated to be about half of that required by Threshold Level 1990, which means that we think that, with proper guidance, the average learner should be able to master it in some 180-200 guided learning-hours, including independent work." (p. 2 of this 154-page publication: ISBN 92-871-2002-1)

The following table shows the correspondences between the six CEFR Levels, Cambridge General English Exams, the Languages Ladder, National Curriculum (England), National Examinations (England) and the number of guided learning hours required for each level. Note: the National Curriculum and National Examinations columns refer to England only; Wales, Scotland and Northern Ireland have different curricula and examination schemes, although they are broadly similar in most respects.

| CEFR Level | Cambridge General English Exams | Languages Ladder | National Curriculum (England) | National Exams (England) | Guided Learning Hours |
|---|---|---|---|---|---|
| A1 Breakthrough | | 1-3 | 1-3 | NQF Entry Level 1-3 | Approx 90-100 hours |
| A2 Waystage | Key English Test (KET) | 4-6 Preliminary | 4-6 | Foundation GCSE | Approx 180-200 hours |
| B1 Threshold | Preliminary English Test (PET) | 7-9 Intermediate | 7-8 & EP* | Higher GCSE | Approx 350-400 hours |
| B2 | First Certificate in English (FCE) | 10-12 Advanced | | AS / A / AEA Level | Approx 500-600 hours |
| C1 Proficiency | Certificate in Advanced English (CAE) | 13 Proficiency | | University Degree Level and above | Approx 700-800 hours |
| C2 Mastery | Certificate of Proficiency in English (CPE) | 14 Mastery | | University Degree Level and above | Approx 1000-1200 hours |

The guided learning hours required may vary, of course, depending upon other factors such as the learner’s mother tongue, other languages already learned, the intensity of the course being followed, the inclination and age of the learner, and the amount of exposure to the language outside lesson times, particularly if the course takes place in a country where the language is spoken.

The CEFR document can be downloaded from the Council of Europe's CEFR website. A Self-assessment Grid relating to the CEFR is available as a Word DOC file. See also the Council of Europe's Language Policy Division website.

Many European countries have already adopted the CEFR as a standard for assessing language proficiency. In the UK the Languages Ladder leans heavily upon the CEFR. Although the Languages Ladder appears to duplicate to some extent what the CEFR has already produced, it offers a new approach to measuring proficiency in the four skills. The Asset Languages assessment scheme is related closely to the Languages Ladder and is designed to provide accreditation options for learners of all ages and abilities from primary to further, higher and adult education. Like the CEFR, the Asset Languages scheme offers sets of "Can Do" Statements that describe what the learner is capable of doing in the different skills.

The Cambridge University ESOL website is very informative regarding the CEFR, especially these pages:

Common European Framework of Reference (CEFR) for Languages, which describes the relationship of the Cambridge ESOL examinations to the CEFR.

General English overview FAQs - frequently asked questions relating to the Cambridge General English examinations.

See the website of the Association of Language Testers in Europe (ALTE). ALTE also provides a comprehensive list of "Can Do" Statements.

What level of competence have students reached, and how can it be assessed? As learners move through the system, accurate information on the level they have reached becomes vital. Increasingly, computers are being used for diagnostic testing.

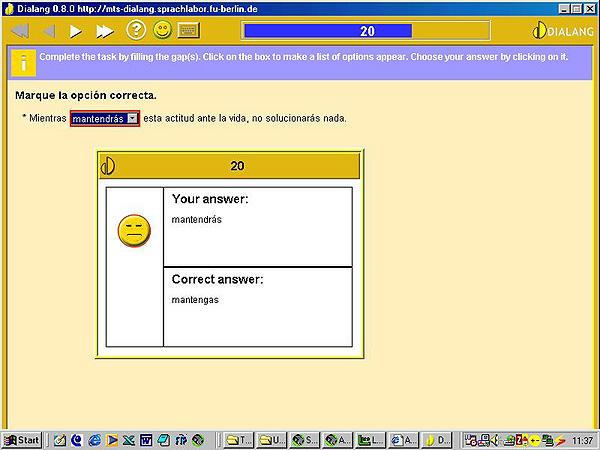

DIALANG was a major EU-funded project aiming to provide effective diagnosis of language competence in 14 European languages, based on the Common European Framework of Reference for Languages: see Section 2.2. Tests for Listening, Writing, Reading, Structures and Vocabulary were created for 14 languages: Danish, Dutch, English, Finnish, French, German, Greek, Icelandic, Irish, Italian, Norwegian, Portuguese, Spanish and Swedish. The website is under reconstruction and not accessible at present, but you can read about DIALANG in Wikipedia: http://en.wikipedia.org/wiki/DIALANG

Figure 2: Screenshot, DIALANG

The EU-funded WebCEF project (2006-2009) aims to enable the collaborative assessment of oral language proficiency through a Web-based environment: http://www.webcef.eu

Language learners and language teachers will be able to evaluate their own video and audio samples together with colleagues and peers across Europe. Like DIALANG this project is also based on the descriptors of the Common European Framework of Reference for Languages: see Section 2.2.

Registered members of the WebCEF community can create, upload, rate and comment on video and audio samples. Using low-threshold technology, they can create their own video and audio samples to be assessed by a community of language learners across Europe.

WebCEF gives:

Adaptive tests aim to assess language competence by selecting each question on the basis of the student's responses to previous ones. If the student answers correctly, a harder question is asked; if not, an easier one is asked. This process is claimed to find the student's level quickly, in order to assist schools in placing the student in a suitable class. Brigham Young University in the US has pioneered web-based adaptive language testing for placement using its WebCAPE system. An example in Spanish is shown below:

Figure 3: Screenshot, WebCAPE

For a full discussion of computer adaptive testing in language learning see Chalhoub-Deville (1999).
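The basic idea of adaptive testing (a harder question after a right answer, an easier one after a wrong answer) can be sketched as a simple up/down "staircase". This is a minimal Python sketch, not the WebCAPE algorithm, which is not described here; operational computer adaptive tests usually rely on item response theory to choose items and estimate ability. The callback `answer_correct`, the six levels, the starting level and the stopping rule of ten questions are all assumptions made for illustration.

```python
from typing import Callable, List, Tuple

def adaptive_placement(
    answer_correct: Callable[[int], bool],  # hypothetical: asks a question at a level, reports right/wrong
    levels: int = 6,
    start: int = 3,
    max_items: int = 10,
) -> Tuple[int, List[Tuple[int, bool]]]:
    """Simple staircase: move up a level after a right answer, down after a wrong one.
    Returns the highest level answered correctly (0 if none) and the question history."""
    level = start
    history: List[Tuple[int, bool]] = []
    for _ in range(max_items):
        correct = answer_correct(level)
        history.append((level, correct))
        # Right answer -> harder question; wrong answer -> easier question (within bounds)
        level = min(level + 1, levels) if correct else max(level - 1, 1)
    passed = [lvl for lvl, ok in history if ok]
    return (max(passed) if passed else 0), history

# Simulated student who can answer every question up to level 4
estimate, history = adaptive_placement(lambda level: level <= 4)
print(estimate)  # 4
```

The six levels could, hypothetically, be mapped to the six CEFR levels A1-C2 described in Section 2.2. A real placement test would also have to cope with lucky guesses and careless slips, which is one reason why statistical models are used in practice.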

This form of testing may become much more common in the future. As noted earlier, Examination Boards are already experimenting with computer-based examining for public examinations such as GCSE and A level. It may soon be commonplace for schools to decide on setting of students by such methods. Teacher training departments may use this form of testing for assessment or self-assessment of trainees' language skills.

BBC Languages: Basic tests in a variety of languages to enable learners to assess their knowledge, plus a range of online courses at different levels: http://www.bbc.co.uk/languages/

The Council of Europe European Language Portfolio provides an attractive, portable and motivating way for individual learners to maintain an up-to-date record of their language learning experience and to track their changing levels of performance. It also contains can-do statements which help learners to assess their language skills: http://www.coe.int/t/dg4/linguistic/

Goethe-Verlag: Free tests in 24 languages and 552 language combinations: http://www.goethe-verlag.com/tests/

TOEFL: A large market clearly exists for testing English language competence and there is already a thriving computer-based testing industry. See the Test Of English as a Foreign Language website: http://www.toefl.org/

Versant Tests: Formerly known as the Spoken English Tests (SET), the Versant Tests are delivered over a telephone or on a computer and scored by computer. Once administered, numeric scores and performance levels that describe the test-taker's ability to understand and speak the selected language are generated within minutes and can be viewed online. Tests can be taken anytime, anywhere: http://www.versanttest.co.uk/

See the website of Linguanet Worldwide. This project has recently undergone expansion to incorporate an interface in a number of new languages and addresses in particular the needs of adult learners and independent learners. The site includes advice on ways of assessing and improving one's current ability in different languages (including links to websites that offer diagnostic and placement tests), communicating electronically with other language learners and finding appropriate resources. A substantial online catalogue of language learning resources is also being built up here: http://www.linguanet-worldwide.org/

See also Graham Davies's Favourite Websites page, which contains an extensive list of websites that offer interactive exercises and tests.


Once upon a time students wrote in exercise books in blue pen and teachers collected these up and marked them in red pen. Although it is true that much learning occurs through considering mistakes, the problem with the above system was that the students did not always have any real incentive to look back at their mistakes and correct them; they were only interested in their overall mark.

As the story moves on, students began to use computers to type their work, print it and hand it in to their teacher, who would then mark the work, again in red pen. The main gain here is to the teacher's eyesight, since the teacher now marks printed text rather than handwriting, which is not always easy to decipher.

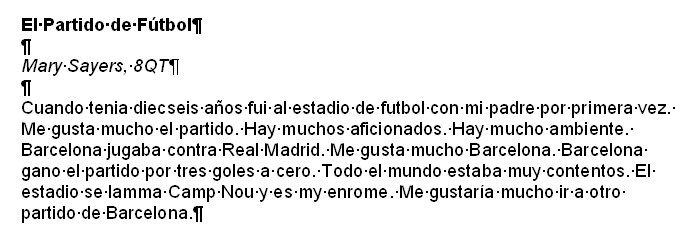

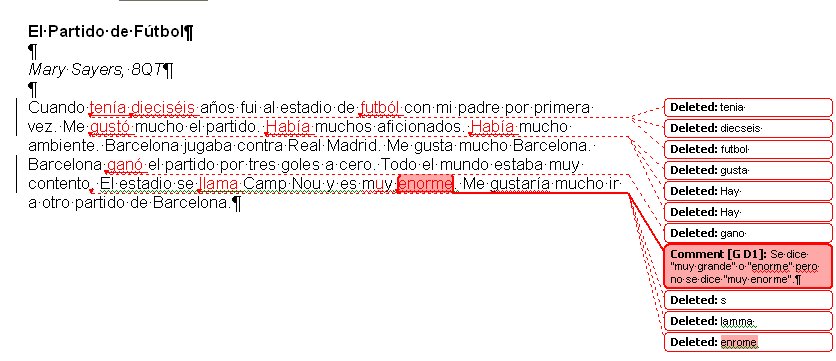

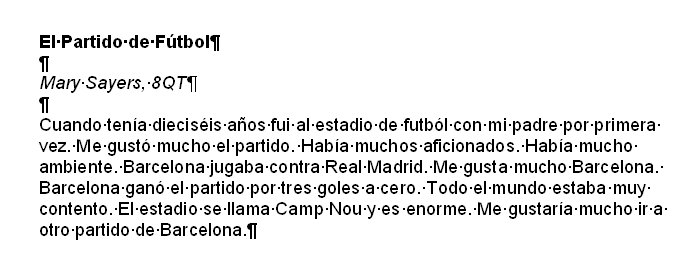

As word-processing software has developed, new tools have become available that make it possible for students to type their assignments and submit them to their teacher electronically - via the Web, email or over a local network. The teacher can now use tools in modern word-processing packages to mark the work electronically. The screenshots below (Figures 4, 5 and 6) show the process of marking a composition in Spanish.


Figure 4: Microsoft Word screenshot, showing student's original, uncorrected work

Figure 5 shows corrections that have been made using the Tools / Track Changes facility in Microsoft Word and the facility for inserting a comment using Insert / Comment.


Figure 5: Microsoft Word screenshot, showing teacher's corrections and a comment

The teacher can then return the work electronically to the student. The process does not end there, for there is now an incentive for the student to consider and accept the teacher's comments and then print a final, error-free product (Figure 6).


Figure 6: Microsoft Word screenshot, showing student's corrected work
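Word's Track Changes records deletions and insertions inside the document itself. To illustrate the underlying idea outside Word, here is a minimal Python sketch, assuming plain-text versions of the student's original and the teacher's corrected text, that marks word-level deletions as [-...-] and insertions as {+...+}. The function name and the markup are illustrative choices and are not part of Word or of any marking package.

```python
import difflib

def track_changes(original, corrected):
    """Show word-level differences between two versions of a text."""
    a, b = original.split(), corrected.split()
    out = []
    matcher = difflib.SequenceMatcher(a=a, b=b)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            out.extend(a[i1:i2])                          # unchanged words
        if tag in ("delete", "replace"):
            out.append("[-" + " ".join(a[i1:i2]) + "-]")  # words the teacher removed
        if tag in ("insert", "replace"):
            out.append("{+" + " ".join(b[j1:j2]) + "+}")  # words the teacher added
    return " ".join(out)

print(track_changes("Yo tengo quince año", "Yo tengo quince años"))
# Yo tengo quince [-año-] {+años+}
```

Comparing whole words rather than individual characters keeps the output readable for learners, at the cost of flagging an entire word when only one letter has changed.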

Marking using the revision facilities in Word

You have seen above a piece of written work in Spanish, with the teacher's corrections and one comment already entered. Have a look at the corrections and the comment. Now choose one of the following pieces of work by students of a range of languages to mark electronically or, if you have samples from your own students, use one of them.

When you have marked the work, it can be sent back to the student by email or across the school's network. The student can redraft the text incorporating your suggestions.

As an alternative to using a word-processor to mark students' work, there is specialist software, such as Markin, that offers additional facilities. The website for this package describes it as follows:

Markin is a Windows program which runs on the teacher's computer. It can import a student's text for marking by pasting from the clipboard, or directly from a document file. Once the text has been imported, Markin provides a comprehensive set of tools enabling the teacher to mark and annotate the text. When marking is complete, Markin saves it as an XHTML document, in which the teacher's marks and annotations appear as coloured text. When the student opens this document in a web browser (such as Internet Explorer, Firefox, Safari or Chrome), they can click on the marks to reveal more detail about the nature of the teacher's annotation or comment. Markin can also export the marked work as a RTF file, which is more suitable for students who want to view it as a printout.

Figure 7: Screenshot from Markin

This software might be easier to use for those not experienced with word-processing and email since it is a ready-made solution.
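The description above says that Markin saves marked work as an XHTML document in which the teacher's marks appear as coloured text that reveals more detail. The short Python sketch below illustrates that general idea. It is not Markin's own format or code: the function name `render_marked_text`, the colour scheme and the `(start, end, kind, note)` mark structure are hypothetical. It also shows the note as a hover tooltip through the HTML `title` attribute rather than on a click.

```python
from html import escape

# Hypothetical colour scheme: one colour per kind of mark (not Markin's own).
MARK_COLOURS = {"spelling": "red", "grammar": "blue", "comment": "green"}


def render_marked_text(text: str, marks: list[tuple[int, int, str, str]]) -> str:
    """Return an HTML paragraph in which each marked span is coloured.

    Each mark is (start, end, kind, note), with character offsets into
    `text`. Marks are assumed not to overlap. The note is stored in the
    span's title attribute, so the browser shows it as a tooltip on hover.
    """
    parts = []
    pos = 0
    for start, end, kind, note in sorted(marks):
        parts.append(escape(text[pos:start]))  # unmarked text before this mark
        colour = MARK_COLOURS.get(kind, "black")
        parts.append(
            f'<span style="color:{colour}" title="{escape(note)}">'
            f"{escape(text[start:end])}</span>"
        )
        pos = end
    parts.append(escape(text[pos:]))  # any text after the last mark
    return "<p>" + "".join(parts) + "</p>"


# A Spanish sentence with one spelling mark on "anos" (characters 16-20).
marked = render_marked_text("Yo tengo quince anos.", [(16, 20, "spelling", "Spelling: años")])
```

Saving `marked` in an `.html` file and opening it in a browser shows "anos" in red, with the note appearing when the pointer rests on it. Escaping the student's text first means that characters such as `<` in the original cannot break the page.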

Imagine that you are a student whose work has been marked electronically. You receive the work back from your teacher and go through the following process. You can use any of the files that you corrected in the previous exercise (see above):

How did this seem from a student perspective? Does it engage the student with the corrections more than conventional paper and pen marking?

Marking with a word-processor may seem slow at first and, if you can't type quickly, it may take longer than conventional marking. One way to overcome this, especially if you frequently make the same comments, is to use the autotext function in Word or its equivalent in other packages. This feature allows you to set up coded comments, e.g. SP to stand for Spelling Mistake. Of course, you may have trained your students to recognise such codes, but autotext allows you to type the abbreviation SP and have the computer convert it to Spelling Mistake. Here's how to do it:
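Setting up autotext is done through Word's menus, but the underlying idea, expanding a short code into a full comment, can be sketched in a few lines of Python. This is an illustration of the principle only, not how Word implements autotext; the codes in `COMMENT_CODES` and the function `expand_codes` are hypothetical examples.

```python
import re

# Hypothetical comment codes; adapt them to the codes your students already know.
COMMENT_CODES = {
    "SP": "Spelling mistake",
    "GR": "Grammar mistake",
    "WO": "Word order",
    "AG": "Agreement (gender/number)",
}

# Match a code only as a whole word, so "SP" inside "SPAIN" is left alone.
_CODE_PATTERN = re.compile(r"\b(" + "|".join(map(re.escape, COMMENT_CODES)) + r")\b")


def expand_codes(comment: str) -> str:
    """Replace every comment code in `comment` with its full wording."""
    return _CODE_PATTERN.sub(lambda m: COMMENT_CODES[m.group(1)], comment)
```

For example, `expand_codes("GR, WO")` gives `"Grammar mistake, Word order"`. Matching is case-sensitive and limited to whole words, which mirrors the way a teacher would use a short capitalised code that cannot be confused with ordinary text.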

See Bishop (2004). Graham Bishop covers the use of the following tools that are available in Microsoft Word:

What is ethically acceptable?

Automatic generation of student reports by computer carries obvious dangers with regard to quality assurance and security. For that reason, such schemes are rarely implemented in practice. However, computers can be used to support report writing by providing templates, a bank of comments and automated comments, without the teacher surrendering normal responsibility for the task.

What will be possible within an overall school policy?

Where schools have adopted computerised reporting systems, it may not be possible to use standard features of word-processing as described below. Inevitably, any system allows only specified features.

What does the technology offer?

There are a number of facilities built into standard word-processing packages that can be exploited for report writing. These include:

You will find a categorised Comment Bank for Report Writing here, which was compiled by student teachers: Comment Bank.

See also:

You can experiment with using these resources to write imaginary reports. You can also make up your own comment bank using similar categories and adapting the comments for your own students. You can use autotext to automate the process of typing in the most commonly used comments.
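A comment bank organised by category can be thought of as a simple lookup table. The Python sketch below assembles a draft report from one chosen comment per category. The categories, levels and wording in `COMMENT_BANK` and the function `build_report` are hypothetical illustrations, not taken from the Comment Bank linked above.

```python
# A small comment bank, grouped by category. The categories and wording are
# illustrative only; a real bank would be built from your own comments.
COMMENT_BANK = {
    "effort": {
        "high": "{name} works hard in every lesson.",
        "medium": "{name} usually makes a good effort.",
        "low": "{name} needs to make a more consistent effort.",
    },
    "speaking": {
        "high": "{name} speaks confidently and accurately.",
        "medium": "{name} is willing to speak but should try to extend answers.",
        "low": "{name} should take every opportunity to practise speaking.",
    },
}


def build_report(name: str, choices: dict[str, str]) -> str:
    """Assemble a draft report from one chosen comment per category.

    `choices` maps a category to a level, e.g. {"effort": "high"}.
    The draft is meant to be read and edited by the teacher, not sent as-is.
    """
    sentences = [
        COMMENT_BANK[category][level].format(name=name)
        for category, level in choices.items()
    ]
    return " ".join(sentences)
```

For example, `build_report("Sam", {"effort": "high", "speaking": "low"})` produces a two-sentence draft. In keeping with the point made above about responsibility, the output is only a starting point that the teacher reads and personalises.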

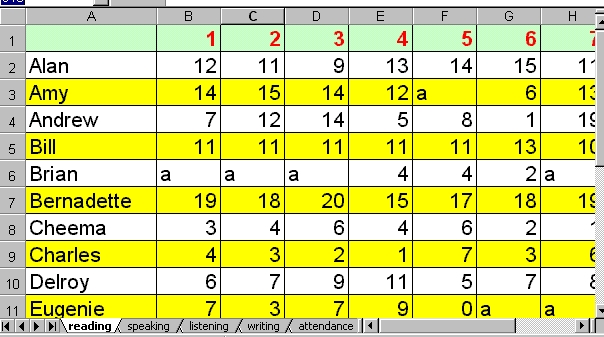

Recording progress electronically can make the process more efficient and the information collected more versatile. The downside is security: is the information stored at least as securely as it would be in a conventional markbook, and is it protected from loss, erasure, etc.? There is also the question of being able to use the information meaningfully. Some school-wide systems demand a lot of effort to input information that teachers cannot readily extract. Sophisticated, custom-designed systems may be less useful than a simple spreadsheet, to which the following examples apply.

Using a spreadsheet as an electronic mark book enables you to:

add up marks and do percentages, averages, etc

The file for this was created in Microsoft Excel and can be fully viewed by downloading the XLS file below. The file is also available in CSV format for loading into other spreadsheets. Note the bottom row of the window in the screenshot (Figure 8), which displays tabs for accessing different pages of the mark book for reading, speaking, listening, writing and attendance. These tabs are not available in the CSV file, because the CSV format holds only a single sheet.

Sample markbook files:

Open the file and have a look at how it works. Try some of the following:

Make a list of the advantages and disadvantages, in your current teaching situation, of an electronic markbook compared with a paper-based one. Which are you going to use in future? Note that even if you don't use a spreadsheet as a markbook, it still provides a very useful format for generating a paper-based loose-leaf markbook.
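The calculations a spreadsheet markbook automates (adding up marks, percentages, averages) can also be sketched in Python, working from a CSV export of one sheet. The column names, the sample marks and the maximum of 20 per task below are hypothetical; because a CSV file holds only one sheet, each tab of a real markbook would need to be exported and processed separately.

```python
import csv
import io

# Hypothetical CSV export of one markbook sheet: one row per student,
# one column per task. Column names and maximum marks are assumptions.
SAMPLE_CSV = """Student,Task1,Task2,Task3
Anna,18,15,20
Ben,12,14,9
Chloe,20,19,17
"""
MAX_MARKS = {"Task1": 20, "Task2": 20, "Task3": 20}


def summarise(csv_text: str) -> tuple[dict[str, float], float]:
    """Return each student's percentage and the class average percentage."""
    reader = csv.DictReader(io.StringIO(csv_text))
    possible = sum(MAX_MARKS.values())
    raw = {}
    for row in reader:
        total = sum(int(row[task]) for task in MAX_MARKS)  # add up the marks
        raw[row["Student"]] = 100 * total / possible        # convert to a percentage
    average = sum(raw.values()) / len(raw)
    # Round only for display, so the average is computed from unrounded values.
    return {name: round(p, 1) for name, p in raw.items()}, round(average, 1)


percentages, class_average = summarise(SAMPLE_CSV)
```

With the sample data, Anna scores 53 out of 60 (88.3%) and the class average is 80.0%. Keeping rounding until the end avoids small errors creeping into the average, which is also good practice in a spreadsheet.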

Keeping student records is a facility available in various computer assisted language learning (CALL) programs. Multimedia CD-ROMs often have this facility, and the teacher can usually interrogate the records to check on students' progress. Some software incorporates an email facility through which student records are automatically forwarded to the teacher at the end of a session. This is available as an option with registered copies of Hot Potatoes. These individual records are important for tracking what students have been doing in CALL sessions, since there is often no other record available.
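As an illustration of what an end-of-session record might contain, the Python sketch below composes (but does not send) a plain-text results email using the standard `email.message.EmailMessage` class. It is not how Hot Potatoes does this; the function `build_results_email`, its fields and the address `teacher@example.org` are hypothetical.

```python
from email.message import EmailMessage


def build_results_email(student: str, exercise: str, score: int,
                        max_score: int, teacher_address: str) -> EmailMessage:
    """Compose (but do not send) an end-of-session results message."""
    msg = EmailMessage()
    msg["To"] = teacher_address
    msg["Subject"] = f"Results: {student} - {exercise}"
    msg.set_content(
        f"Student: {student}\n"
        f"Exercise: {exercise}\n"
        f"Score: {score}/{max_score} ({100 * score / max_score:.0f}%)\n"
    )
    return msg


msg = build_results_email("Anna", "Past tense quiz", 8, 10, "teacher@example.org")
```

Actually sending the message would require a mail server (for example via Python's `smtplib`), which is deliberately left out here; the point is that each record is small, structured and easy for the teacher to file.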

Increasingly, modern languages departments have their own website or section at their school or college site. To what extent can a website be used to manage the assessment of language students? For example:

Take a Hot Potatoes test on Computer Aided Assessment by clicking here: CAATest.htm. The answers can all be found in Module 4.1!

There is an increasing number of websites that offer distance learning materials, including whole courses delivered via the Web and email, using so-called Virtual Learning Environments (VLEs). See the following modules that deal in more detail with the Web, distance learning and VLEs:

It takes many hours of study and practice to acquire a reasonable level of proficiency in a foreign language. This represents a substantial investment for any organisation considering the selection of people for language training. It would therefore be useful to predict with reasonable accuracy to what extent an individual who had never studied a language before would benefit from attending a language course. Businesses often underestimate how long it takes to acquire a reasonable level of proficiency in a foreign language. The Council of Europe has calculated, for example, that it requires 180-200 learning hours to achieve Waystage (A2) level and 350-400 hours to achieve Threshold (B1) level within the Common European Framework of Reference for Languages (CEFR), Threshold level being the level at which the learner begins to communicate with a certain degree of confidence. See Section 2.2.
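To make these figures concrete, the short Python calculation below converts the quoted hour ranges into weeks of study. The rate of 4 hours per week is a hypothetical example, and the hours are assumed to be cumulative from beginner level.

```python
import math

# Learning hours quoted above (Council of Europe figures), as (low, high) ranges.
HOURS_TO_LEVEL = {"A2 (Waystage)": (180, 200), "B1 (Threshold)": (350, 400)}


def weeks_needed(hours: int, hours_per_week: float) -> int:
    """Whole weeks of study needed to accumulate `hours` learning hours."""
    return math.ceil(hours / hours_per_week)


HOURS_PER_WEEK = 4  # hypothetical study rate
for level, (low, high) in HOURS_TO_LEVEL.items():
    print(f"{level}: {weeks_needed(low, HOURS_PER_WEEK)} to "
          f"{weeks_needed(high, HOURS_PER_WEEK)} weeks at {HOURS_PER_WEEK} h/week")
```

At 4 hours per week, A2 takes 45 to 50 weeks and B1 takes 88 to 100 weeks, which helps to explain why organisations so often underestimate the investment involved.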

It can take a lot longer to reach an operational level for a particular profession. Airline pilots have to be able to communicate in English, and other staff employed in the airline industry are expected to be proficient in foreign languages. An international railway operator requires its English-speaking train drivers to follow a 600-hour course (plus homework) in French to get them from scratch to operational level. The training includes a 3-week residential course in France. An important factor to bear in mind here is safety. A driver who misunderstands an instruction in French while driving a train at 300 kilometres per hour is simply not acceptable in the profession. Language tests in such professions are therefore rigorous, and it would obviously be useful to predict individuals' aptitude for learning a foreign language before sending them off to follow an expensive course.

Success in learning a foreign language depends on a number of basic aptitudes, for example:

But factors other than aptitude often make a successful linguist, e.g. attitude to learning, a good learning style, interpersonal communication skills, intercultural competence, etc: see Skehan (1989) & Skehan (1998).

There is a useful collection of articles on Modern Language Aptitude Testing (MLAT) in Volume 9, Nos. 1 & 2 (1998) of Applied Language Learning, a journal published by the Defense Language Institute Foreign Language Center, Monterey, California, USA. Available at: http://www.dliflc.edu/archive/documents/all9_new.pdf

The website of Second Language Testing Inc (SLTI) has comprehensive information on Language Aptitude Testing.

The Language Aptitude Tests suite by Paul Meara, James Milton & Nuria Lorenzo-Dus (2001) consists of five discrete tests:

See also:

Language Aptitude Test for Entrance in Classics, Oxford University, 1995.

Most teachers have been confronted at some time with a piece of work that contains elements that have been copied from another source without due acknowledgement. Arguably, plagiarists are sophisticated cheats, but at secondary school language learning level they are more commonly naive incompetents who think that copying a large chunk of text from a website or CD-ROM is somehow going to get them a good mark. New technologies have made plagiarism easier, but they have also made its detection easier. Responding to plagiarism requires a two-tier strategy: how to catch the cheat and how to train learners to use source material appropriately. Academic plagiarism is the subject of a publication by Decoo & Colpaert (2002).

Detecting plagiarism

The advent of the information age and the course requirements of GCSE and A-Level examinations have called for new skills in language students. It is important for students to be able to evaluate information, select appropriately and acknowledge the sources they have quoted. Providing examples and practice in this can be a proactive way of preventing naive plagiarism. However, there is a dearth of good advice for students, which reflects the relative novelty of the problem. Teachers will probably be able to detect plagiarism in foreign language work readily, because they know their students' level of language, but it is also useful for them to know how to use a search engine to detect copying from websites and to find the precise site from which the text was copied. An easy strategy is to type a section of the suspected text into the search box of a search engine such as Google, enclosing it in speech marks so that the engine looks for the exact phrase. Five to ten words should be sufficient. With luck, the search engine will find the text on the website it was copied from. There are many tools that help detect plagiarism, for example:
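The exact-phrase search described above, and a simple overlap check of the kind that plagiarism tools build on, can be sketched in Python. The Google search URL pattern is an assumption based on Google's public search address and may change; the word n-gram (shingling) comparison is one common technique for spotting shared wording, not necessarily the method used by any particular tool. All function names are hypothetical.

```python
import re
from urllib.parse import quote_plus


def words(text: str) -> list[str]:
    """Lower-case word tokens; punctuation is ignored."""
    return re.findall(r"\w+", text.lower())


def phrase_search_url(suspect: str, start: int = 0, length: int = 8) -> str:
    """Build a search URL for an exact phrase of `length` words from `suspect`.

    The phrase is wrapped in double quotation marks so that the engine looks
    for the exact wording. The Google URL pattern is an assumption.
    """
    phrase = " ".join(suspect.split()[start:start + length])
    return "https://www.google.com/search?q=" + quote_plus(f'"{phrase}"')


def shared_ngrams(a: str, b: str, n: int = 5) -> set[tuple[str, ...]]:
    """Word n-grams (runs of n consecutive words) that occur in both texts."""
    def grams(text: str) -> set[tuple[str, ...]]:
        w = words(text)
        return {tuple(w[i:i + n]) for i in range(len(w) - n + 1)}
    return grams(a) & grams(b)
```

The default of 8 words sits within the five-to-ten-word range suggested above. For `shared_ngrams`, a run of several identical five-word sequences between a student's text and a website is a strong hint of copying, whereas one or two shared sequences may simply be common phrases.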

See also:

Copyright infringement is a growing problem, which we deal with in detail in our General guidelines on copyright. See also:

Bangs P. (2003) "Engaging the learner - how to author for best feedback". In Felix U. (ed.) Language learning online: towards best practice, Lisse: Swets & Zeitlinger.

Bishop G. (2004) "First steps towards electronic marking of language assignments", Language Learning Journal (ALL) 29: 42-46.

Brown J. (1997) "Computers in language testing: present research and some future predictions", Language Learning & Technology 1, 1: 44-59. Available at: http://llt.msu.edu/vol1num1/brown/default.html

Chalhoub-Deville M. (ed.) (1999) Issues in computer adaptive testing of reading proficiency, Cambridge: Cambridge University Press.

Chalhoub-Deville M. (2001) "Language testing and technology: past and future", Language Learning & Technology 5, 2: 95-98. Available at: http://llt.msu.edu/vol5num2/deville/default.html

Council of Europe (2001) Common European Framework of Reference for Languages, Cambridge: Cambridge University Press. ISBNs: Hardback 0521803136, Paperback 0521005310. The CEFR document can be downloaded from the Council of Europe's CEFR website. A Self-assessment Grid relating to the CEFR is available as a Word DOC file. See also the Council of Europe's Language Policy Division website.

Decoo W. & Colpaert J. (2002) Crisis on campus: confronting academic misconduct, MIT Press / Bradford Books.

Department for Education and Skills - now known as the Department for Children, Schools and Families (2002): Languages for all: languages for life - a strategy for England.

Dunkel P. (1999) "Considerations in developing or using second / foreign language proficiency computer-adaptive tests", Language Learning & Technology 2, 2: 77-93. Available at: http://llt.msu.edu/vol2num2/article4/

Godwin-Jones R. (2001) "Language testing tools and technologies", Language Learning & Technology 5, 2: 8-12. Available at: http://llt.msu.edu/vol5num2/emerging/default.html

Heift T. & Schulze M. (eds.) (2003) Error diagnosis and error correction in CALL, CALICO Journal Special Issue 20, 3.

Krashen S. (1985) The input hypothesis: issues and implications, London: Longman.

Language Learning & Technology 5, 2 (2001) Special Issue on Computer Assisted Language Testing. Available at: http://llt.msu.edu/vol5num3/default.html

Meara P., Milton J. & Lorenzo-Dus N. (2001) Language Aptitude Tests (book and CD-ROM), Newbury: Express Publishing. Search for the title "Language Aptitude Tests" in the Catalogue section at the Express Publishing website.

Nielsen J. (1995) Multimedia and hypertext: the Internet and beyond, Boston: Academic Press.

Nielsen J. (1997) "Be succinct! Writing for the Web", Alertbox, 15 March 1997.

Nielsen J. (1998) "Fighting Linkrot", Alertbox, 14 June 1998.

Nielsen J. (2010) "iPad and Kindle reading speeds", Alertbox, 2 July 2010.

Pachler N. & Byrom K. (1999) "Assessment of and through ICT". In Leask M. & Pachler N. (eds.) Learning to teach using ICT in the secondary school, London: Routledge.

Rendall H. (2001) "Developing a sense of gender in French: ICT integration at initial learner level". In Atkinson T. (ed.) Reflections on ICT, London: CILT.

Roever C. (2001) "Web-based language testing", Language Learning & Technology 5, 2: 84-94. Available at: http://llt.msu.edu/vol5num2/roever/default.html

Skehan P. (1989) Individual differences in second-language learning, London: Edward Arnold.

Skehan P. (1998) A cognitive approach to language learning, Oxford: OUP.

Winke P. & MacGregor D. (2001) "Review of Hot Potatoes", Language Learning & Technology 5, 2: 28-33. Available at: http://llt.msu.edu/vol5num2/review3/default.html

CAA Centre website: A site that has been designed to provide general information and guidance on the use of Computer Aided Assessment (CAA) in higher education.

Council of Europe, Common European Framework of Reference for Languages

Graham Davies's Favourite Websites: An extensive list of websites relating to language learning and teaching, including many links to websites that offer interactive exercises and tests.

Language Testing Resources: A comprehensive and very interesting set of Web pages maintained by Glenn Fulcher at the University of Leicester. Extensive information about all aspects of language testing.

If you wish to send us feedback on any aspect of the ICT4LT website, use our online Feedback Form or visit the ICT4LT blog.

The Feedback Form and a link to the ICT4LT blog can be found at the bottom of every page at the ICT4LT site.

Document last updated 19 April 2012. This page is maintained by Graham Davies.

Please cite this Web page as:

Atkinson T. & Davies G. (2012) Computer Aided Assessment (CAA) and language learning. Module 4.1 in Davies G. (ed.) Information and Communications Technology for Language Teachers (ICT4LT), Slough, Thames Valley University [Online]. Available at: http://www.ict4lt.org/en/en_mod4-1.htm [Accessed DD Month YYYY].

© Sarah Davies in association with MDM creative. This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 3.0 Unported License.